Why you should care about on-manifold explanations

At Faculty, our dedicated R&D team has spent the last couple of years focusing on AI safety problems that arise from the application of present-day technologies. We’ve divided these problems into four broad categories: explainability, fairness, robustness and privacy. Each of these categories matters when training machine learning models responsibly, but in this blog post we’re going to single out explainability.

Explainability refers to the study of tools and methods for understanding how machine learning models make decisions – we’ve written about it a few times before on the Faculty blog. There are lots of different approaches, but we’ve settled on Shapley values as our preferred option, because they are model-agnostic, interpretable, and built on a theoretically principled foundation from cooperative game theory. However, open-source implementations of Shapley values are not perfect; much of our research into explainability has focused on how we can address some of their deficiencies.

One of the key issues with existing implementations of Shapley values is the problem of evaluating the model on fictitious data. To understand why this happens and why it’s a problem, it’s helpful to understand a little bit about how Shapley values are calculated.

The data manifold problem

Shapley values, as applied to a machine learning model, measure how much each feature contributes to the model prediction. To calculate them, the Shapley framework requires that we evaluate the model on subsets of its features. In general, however, a machine learning model cannot be evaluated without values for all of its expected input features. To account for this, instead of omitting features, we marginalise (average) over them; that is, we hold fixed the features we want the model to use, and measure the average output of the model as the remaining features vary.
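
The subset-based calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than any particular library's implementation: `shapley_values` and `value_fn` are hypothetical names, and `value_fn` is assumed to return the model's average output when only the given features are held fixed. The weights are the standard Shapley weights from cooperative game theory.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values for a value function defined on feature subsets.

    `value_fn` takes a frozenset of feature indices and returns the average
    model output when only those features are held fixed.
    """
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Standard Shapley weight for a subset of this size.
            weight = (
                factorial(size)
                * factorial(n_features - size - 1)
                / factorial(n_features)
            )
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to subset s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Toy value function for two features (numbers invented for illustration).
toy_values = {
    frozenset(): 10.0,
    frozenset({0}): 30.0,
    frozenset({1}): 20.0,
    frozenset({0, 1}): 50.0,
}
phi = shapley_values(lambda s: toy_values[s], n_features=2)
print(phi)  # [25.0, 15.0]
```

The two values sum to 40, the difference between the output with all features fixed and the output with none fixed. The loop visits every subset, so the cost grows exponentially with the number of features; practical implementations approximate the sum by sampling subsets.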

Naïve approaches fill in the non-fixed features with values sampled at random from the dataset, repeat this process a number of times, and average the model output. Doing so implicitly assumes that the features are independent of one another – a dangerous assumption that almost never holds.
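
As a rough sketch of this naïve procedure, the function below builds "hybrid" rows by keeping the fixed features of the point being explained and copying every other feature from a randomly chosen dataset row. The names `marginal_value` and `toy_model`, and the toy data, are assumptions made for illustration.

```python
import random


def marginal_value(model, x, fixed, data, n_samples=1000, seed=0):
    """Average model output with the features in `fixed` held at their values
    in `x` and all other features copied from random dataset rows."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        donor = rng.choice(data)  # drawn without regard to the fixed values
        hybrid = [x[j] if j in fixed else donor[j] for j in range(len(x))]
        total += model(hybrid)
    return total / n_samples


# Toy rows of [age, years of driving experience] and a toy model.
data = [[20, 2], [30, 12], [40, 22], [50, 32]]


def toy_model(row):
    return row[0] + 10 * row[1]


x = [20, 2]
all_fixed = marginal_value(toy_model, x, fixed={0, 1}, data=data)
age_only = marginal_value(toy_model, x, fixed={0}, data=data)
print(all_fixed)  # 40.0, identical to toy_model(x)
print(age_only)   # averages over hybrids such as [20, 32]
```

With only age fixed, the average includes rows like `[20, 32]`, a combination that never occurs in the data. Nothing in the procedure prevents this, because the donor row is chosen independently of the fixed values.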

For example, consider a set of data points that measure the age and driving experience of different individuals. Most people learn to drive at about the same age, so the data points will be concentrated around a line. If we were to hold age fixed and sample new values for driving experience without taking into account the correlation between them, we might end up with a 20-year-old with 12 years of driving experience (unlikely) or even with 21 years of driving experience (impossible). We shouldn’t expect the model to behave reasonably on such unrealistic data points, or in a way that is typical of its behaviour on real data. Such fictitious data will skew the average and damage the explanation.

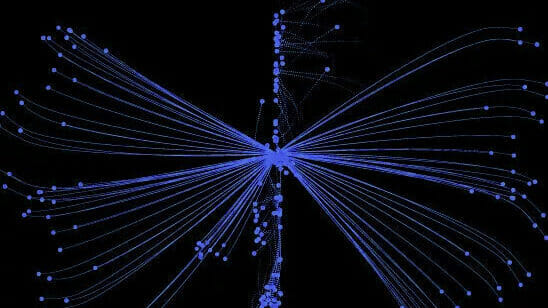
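
To put rough numbers on this example, the sketch below builds a synthetic population and counts how often independent sampling gives a 20-year-old an impossible amount of driving experience. Everything about the population is an assumption made for illustration: ages run from 18 to 80, people start driving between 17 and 25, and 17 is taken as the minimum driving age.

```python
import random

rng = random.Random(0)
MIN_DRIVING_AGE = 17  # assumed for illustration

# Synthetic (age, years of driving experience) pairs. Experience never
# exceeds age - MIN_DRIVING_AGE, so the points cluster around a line.
people = []
for _ in range(5000):
    age = rng.randint(18, 80)
    started = rng.randint(MIN_DRIVING_AGE, min(age, 25))
    people.append((age, age - started))

# Hold age fixed at 20 and sample experience independently of age.
fixed_age = 20
max_possible = fixed_age - MIN_DRIVING_AGE  # 3 years
sampled_experience = [rng.choice(people)[1] for _ in range(1000)]

impossible = sum(e > max_possible for e in sampled_experience)
share_impossible = impossible / len(sampled_experience)
print(f"{share_impossible:.0%} of sampled rows are impossible at age {fixed_age}")
```

In this synthetic population the large majority of sampled rows are impossible for a 20-year-old, so an average taken over them is dominated by inputs the model would never see in practice.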

This is an example of a broader phenomenon: high-dimensional data often concentrates around a lower-dimensional subspace. In the example above, 2-dimensional data (age, driving experience) concentrates around a 1-dimensional line. In general, we call this lower-dimensional subspace “the data manifold”.

A manifold is a mathematical generalisation of a curve or surface to higher dimensions, i.e. to data with many features. Evaluating the model away from this data manifold leads to uncontrolled behaviour that is not informative of how the model will behave on realistic data. As a result, off-manifold explainability techniques often provide misleading interpretations of the model’s decisions.

How do we develop explainability tools that respect the data manifold?

Faculty has developed methods for explainability that respect the data manifold, so that explanations reflect how the model behaves on realistic data. We’ve done so in two different ways.

The first way is to directly tackle the problem of sampling values for the non-fixed features. Rather than sampling them at random from the data, we would ideally sample them from their conditional distribution given the fixed values. For most datasets it’s not practical to work with empirical conditional distributions, so we use a probabilistic generative model, specifically a masked variational autoencoder (Masked VAE), to learn the conditional distribution of the data and fill in missing values. In our earlier example this would mean that, had we fixed the age of the driver, the Masked VAE would allow us to sample values for driving experience that are reasonable given that fixed age, based on what the Masked VAE has observed in the data.
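
The idea of conditional sampling can be illustrated on the toy driving example without a generative model. The sketch below is not a Masked VAE: it uses a crude empirical stand-in that copies non-fixed features from the `k` dataset rows closest to the fixed values, which is only workable for small, low-dimensional data like this. The function name, the synthetic population and the toy model are all assumptions made for illustration.

```python
import random


def conditional_value(model, x, fixed, data, k=20, n_samples=200, seed=0):
    """Average model output with non-fixed features copied from the k rows
    nearest to `x` on the fixed features (a stand-in for conditional sampling)."""
    rng = random.Random(seed)

    def distance(row):
        return sum((row[j] - x[j]) ** 2 for j in fixed)

    neighbours = sorted(data, key=distance)[:k]
    total = 0.0
    for _ in range(n_samples):
        donor = rng.choice(neighbours)
        hybrid = [x[j] if j in fixed else donor[j] for j in range(len(x))]
        total += model(hybrid)
    return total / n_samples


# Synthetic rows of [age, years of driving experience] (assumed population:
# ages 18 to 80, people start driving between 17 and 25).
data_rng = random.Random(1)
data = []
for _ in range(5000):
    age = data_rng.randint(18, 80)
    started = data_rng.randint(17, min(age, 25))
    data.append([age, age - started])


def toy_model(row):
    return row[1]  # returns the driving experience the model is shown


x = [20, 2]
on_manifold = conditional_value(toy_model, x, fixed={0}, data=data)
# Setting k to the whole dataset recovers independent sampling.
off_manifold = conditional_value(toy_model, x, fixed={0}, data=data, k=len(data))
print(on_manifold, off_manifold)
```

With age fixed at 20, the conditional version only ever shows the model a few years of experience, while independent sampling averages over experience levels drawn from the whole population. A learned conditional model plays the same role when the data has too many features for nearest-neighbour lookups to be reliable.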

The second approach is slightly different. Instead of learning to approximate the conditional data distribution, we learn to approximate the average output of the model given the fixed subset of input features. We do this by training a surrogate model that takes only the fixed feature values as input. The surrogate model then attempts to predict the average output of the original model, which operates on the full set of features.
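
A minimal sketch of the surrogate idea on the toy driving example follows. The details are assumptions, since the training setup is not specified here: the surrogate is a one-feature least-squares line, chosen only for brevity, and it is fitted to the original model's outputs on real rows. Minimising squared error while seeing only the fixed feature pushes the surrogate towards the conditional average of the original model's output. The population and `original_model` are invented for illustration.

```python
import random
from statistics import mean

rng = random.Random(0)

# Synthetic (age, years of driving experience) rows (assumed population).
rows = []
for _ in range(5000):
    age = rng.randint(18, 80)
    started = rng.randint(17, min(age, 25))
    rows.append((age, age - started))


def original_model(age, experience):
    """Stand-in for the model being explained; it uses both features."""
    return 0.5 * age + 2.0 * experience


# The surrogate sees only age; its targets are the original model's outputs
# on real rows, so no off-manifold inputs are ever constructed.
ages = [age for age, _ in rows]
targets = [original_model(age, exp) for age, exp in rows]

# Closed-form least-squares fit of targets against age.
age_mean, target_mean = mean(ages), mean(targets)
slope = sum(
    (a - age_mean) * (t - target_mean) for a, t in zip(ages, targets)
) / sum((a - age_mean) ** 2 for a in ages)
intercept = target_mean - slope * age_mean


def surrogate(age):
    """Approximate average output of original_model given age alone."""
    return intercept + slope * age


print(surrogate(50))
```

In this population a 50-year-old has on average about 29 years of experience, so the original model's average output at that age is close to 83, and the fitted line lands near that value. This sketch covers a single subset (age alone); a practical surrogate would need to handle every subset of features that the Shapley calculation requests, and would typically use a more flexible model.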

Both of these approaches are practical, scalable ways to ensure that model evaluations stay on-manifold when computing Shapley values, thereby increasing the quality, and therefore utility, of explanations.